Critical Analysis of AI-enabled electrocardiography alerts

Summary of the AI Use Case

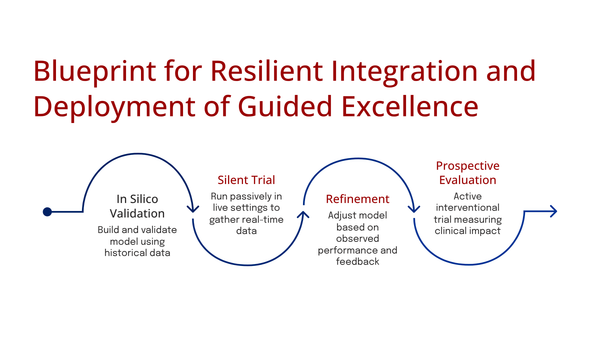

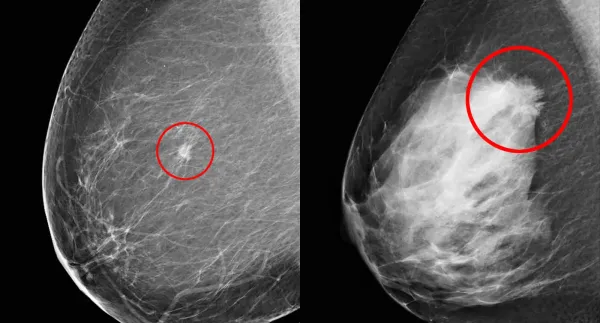

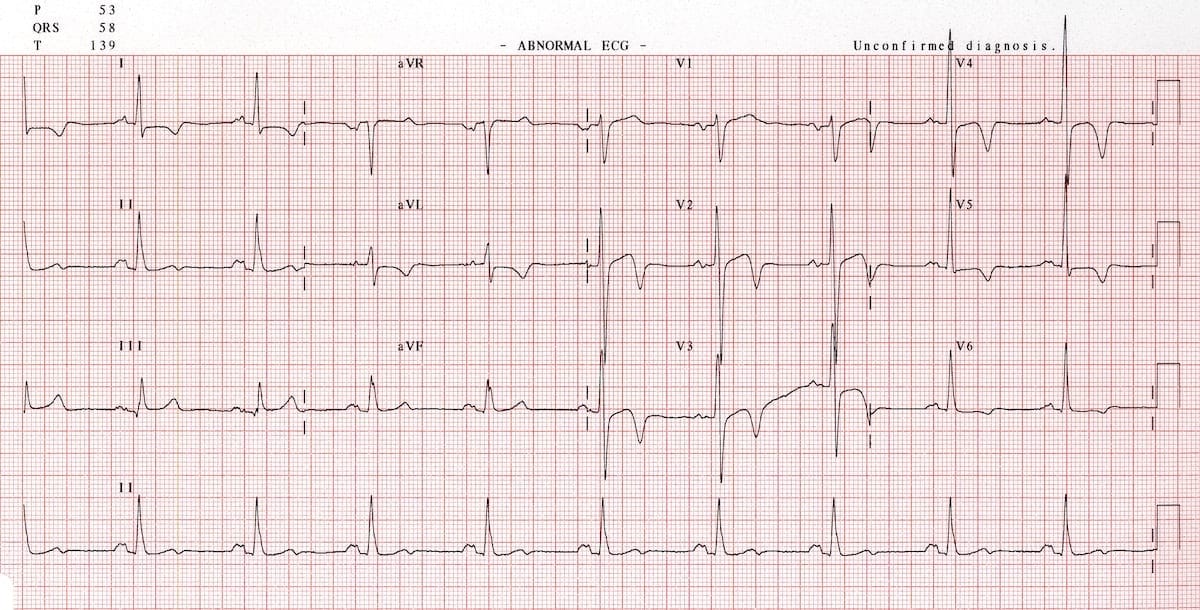

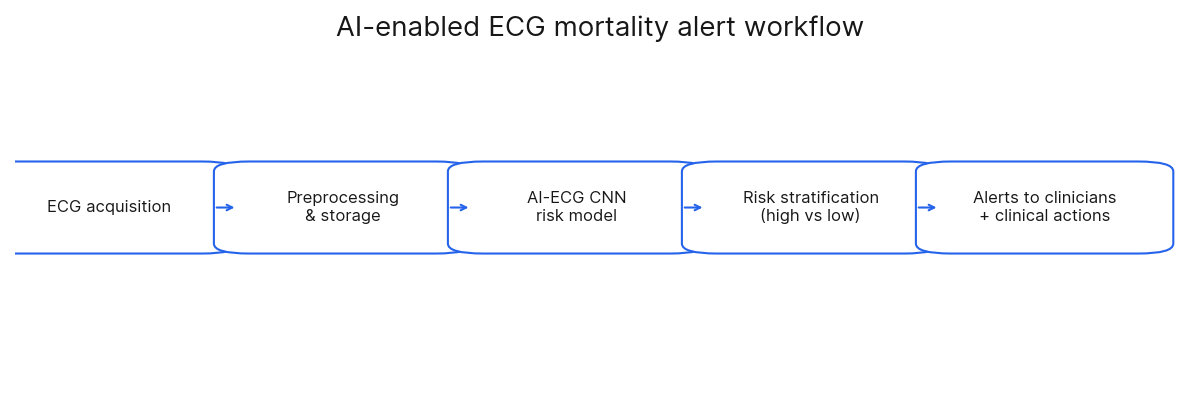

AI-Enabled ECG Alerts and Mortality (Lin CS et al., Nat Med, 2024) evaluated whether an AI-enabled electrocardiogram (AI-ECG) could reduce patient mortality by sending physicians warning messages about patients at high risk. The AI was designed to find "concealed information" in ECG data, identifying "subtle changes of several underlying cardiovascular diseases" that were not otherwise clinically apparent.

Technical Evaluation

Lin CS et al. used a convolutional neural network (CNN), a deep learning architecture particularly well-suited for signal analysis, running on an NVIDIA DGX-1 high-performance computing system. One-dimensional CNNs are adept at learning a hierarchy of features directly from raw data like an ECG (Goodfellow et al., 2016). In this context, initial layers can learn basic morphological components (e.g., QRS edges), while deeper layers combine these into abstract patterns indicative of complex pathologies (Hannun et al., 2019; Wang et al., 2017). This architecture provides translation invariance, meaning it can recognize a clinically important waveform regardless of its precise location in the 10-second recording. Critically, due to sparse connectivity and parameter sharing, a CNN has far fewer parameters than a fully connected network, making it more computationally efficient and substantially less prone to overfitting high-dimensional signal data (Goodfellow et al., 2016).
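The parameter-sharing argument can be made concrete with a small count. The sketch below is illustrative only: the sampling rate (500 Hz), the number of filters (32), and the kernel width (16) are assumptions chosen for the example, not values reported by Lin CS et al. It compares one 1D convolutional layer with a fully connected layer that produces an output of the same shape.

```python
def conv1d_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per filter; does not depend on signal length."""
    return in_channels * kernel_size * out_channels + out_channels


def dense_params(n_inputs, n_outputs):
    """One weight per input-output pair plus one bias per output unit."""
    return n_inputs * n_outputs + n_outputs


# Assumed recording shape: 12 leads, 10 s at 500 Hz -> 5000 samples per lead.
leads, samples = 12, 10 * 500
# Assumed layer size (hypothetical, for illustration only).
filters, kernel = 32, 16

# The same 32 filters slide along the whole recording (parameter sharing).
conv = conv1d_params(leads, filters, kernel)

# A dense layer mapping every input sample to every output position
# (32 feature maps x 5000 positions, i.e. the same output shape).
dense = dense_params(leads * samples, filters * samples)

print(f"conv layer:  {conv:,} parameters")
print(f"dense layer: {dense:,} parameters")
print(f"ratio:       {dense / conv:,.0f}x")
```

Under these assumptions the convolutional layer needs a few thousand parameters while the dense equivalent needs billions, which is the gap the efficiency and overfitting argument relies on.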

This CNN was trained via supervised learning on a massive dataset of "more than 450,000 ECGs" using "all-cause mortality" as the primary label. While using such a broad label introduces "label noise" (as the cause of death may not be cardiac), the model's strong performance in predicting cardiac-specific death (HR=10.78) suggests it successfully isolated the relevant cardiac mortality signal from this noise. A notable omission from the paper, however, is the mortality event rate within the training set, which would be useful for assessing data balance.

The model's predictive performance was excellent. It demonstrated high discriminative ability, achieving an Area Under the Receiver Operating Characteristic Curve (AUC) of 0.886 for 90-day mortality. However, in cases of significant class imbalance, which is common in mortality prediction (i.e., far more survivors than deaths), AUC can be misleadingly optimistic. A superior metric in such scenarios is often the Area Under the Precision-Recall Curve (AUPRC), which focuses on the performance of the model on the minority (positive) class and was not reported in this study. The model's high degree of separability was reinforced by its C-index (a concordance metric for survival data), which was also 0.886, outperforming models built from all other available patient and ECG characteristics. Furthermore, the model showed a strong association with outcomes; the high-risk group identified by the AI-ECG had a Hazard Ratio (HR) of 7.53 for all-cause mortality, indicating a more than seven-fold increase in risk compared to the low-risk group.
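The point about class imbalance can be illustrated with a toy example. The scores below are invented for illustration and have nothing to do with the study data. The sketch computes AUROC and average precision (a common step-wise estimate of AUPRC) for the same positive and negative score patterns, first balanced and then with the negatives replicated 25 times.

```python
def auroc(labels, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision values measured at the rank of each positive case."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank
    return total / hits


def evaluate(pos_scores, neg_scores):
    labels = [1] * len(pos_scores) + [0] * len(neg_scores)
    scores = pos_scores + neg_scores
    return auroc(labels, scores), average_precision(labels, scores)


# Invented risk scores: deaths (positives) and survivors (negatives).
positives = [0.9, 0.8, 0.6, 0.4]
negatives = [0.7, 0.5, 0.3, 0.2]

auroc_balanced, ap_balanced = evaluate(positives, negatives)
# Same score pattern, but survivors now outnumber deaths 25 to 1.
auroc_imbalanced, ap_imbalanced = evaluate(positives, negatives * 25)

print(f"balanced:   AUROC={auroc_balanced:.3f}  AP={ap_balanced:.3f}")
print(f"imbalanced: AUROC={auroc_imbalanced:.3f}  AP={ap_imbalanced:.3f}")
```

AUROC is identical in both settings because it only compares positive-negative pairs, whereas average precision falls once the negatives dominate. This is why a reported AUPRC would have complemented the 0.886 AUC.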

Despite this strong accuracy, the CNN's internal decision-making process is not transparent. The features learned by its deep layers are abstract mathematical representations that do not map to discrete, human-understandable clinical concepts. Consequently, clinicians receive a high-risk alert with no mechanistic explanation for why the model arrived at that conclusion. This "black box" nature poses a potential challenge for clinical trust and for determining the most appropriate actionable response.

Implementation, Practical Considerations, & Ethical Analysis

The AI-ECG system was integrated directly into the hospital's clinical workflow. It received ECGs in real-time, processed them on a dedicated NVIDIA DGX-1 server, and delivered results back to clinicians through the existing electronic health record (EHR) and as smartphone notifications. The paper does not name the specific EHR vendor, referring to it generically as the "information system". Upon receiving an alert, physicians were encouraged to perform a comprehensive assessment of the patient; however, the study did not implement a formal system for physicians to provide feedback on the AI's alerts.

The paper acknowledges several real-world challenges and potential biases. These include the risk of alert fatigue, the system's effectiveness being dependent on local hospital policies, and the limitations inherent in relying on EHR data. The study also directly addresses the Hawthorne effect, the possibility that physicians altered their behavior simply because they were being observed, which could mean the mortality reduction would be smaller in a non-trial setting. To test the system's robustness pragmatically, the alert threshold was set based on a consensus regarding clinical workload rather than on pure statistical optimization.
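One way to picture a workload-driven threshold is as a cap on the share of ECGs allowed to trigger an alert. The sketch below is a hypothetical illustration of that idea, not the procedure used in the trial: the function name `workload_threshold`, the alert budget of 20%, and the risk scores are all assumptions made for the example.

```python
import math


def workload_threshold(scores, max_alert_fraction):
    """Lowest cutoff such that alerts (score >= cutoff) stay within the budget.

    Returns math.inf when even the single highest score would exceed the budget.
    """
    budget = math.floor(len(scores) * max_alert_fraction)
    threshold = math.inf
    # Walk the distinct scores from highest to lowest, lowering the cutoff
    # for as long as the resulting alert count still fits the budget.
    for candidate in sorted(set(scores), reverse=True):
        if sum(s >= candidate for s in scores) > budget:
            break
        threshold = candidate
    return threshold


# Invented historical risk scores (hypothetical data).
history = [0.02, 0.05, 0.05, 0.10, 0.15, 0.20, 0.35, 0.50, 0.80, 0.95]

# Assumed consensus: clinicians can handle alerts on at most 20% of ECGs.
threshold = workload_threshold(history, max_alert_fraction=0.2)
alerts = [s for s in history if s >= threshold]

print(f"threshold={threshold}, alerts fired={len(alerts)} of {len(history)}")
```

A cutoff chosen this way trades statistical optimality for a predictable alert volume, which matches the pragmatic intent described above.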

A prespecified exploratory analysis was conducted to ensure the intervention's benefits were distributed fairly. The results showed that the "impact of the intervention on mortality risk reduction was largely consistent across subgroups," including age, sex, and various comorbidities, suggesting an equitable benefit for all patients identified as high-risk.

Because the research team did not have direct contact with patients and relied on existing EHR data, patient consent was waived by the institutional review board. However, informed consent was obtained from all 39 participating physicians before the trial began.

Personal Critique & Reflection

The authors concluded that their AI-enabled ECG alert system successfully identified high-risk hospitalized patients and, by alerting physicians, prompted timely clinical interventions that significantly reduced 90-day all-cause mortality. The researchers conducted a pragmatic, single-blind randomized controlled trial (RCT) involving 39 physicians and nearly 16,000 patients across two hospitals. They integrated an AI model that analyzes standard 12-lead ECGs to predict mortality risk into the hospital's workflow.

The number of physicians and patients is adequate. The main concern is that the study's design may not accurately reflect the long-term impact of alert fatigue. While the authors suggest the alert burden was acceptable, scaling this system could lead to significant physician burnout and ignored alerts.

The fundamental challenge with this system is that it is a "black box". The alert tells a physician that a patient is at high risk but provides no specific, actionable reason why. This ambiguity forces physicians to initiate a broad workup. Over time, alerts that consistently fail to reveal an obvious underlying cause risk being ignored. Alert overriding is well documented: frontline clinicians override 49-96% of drug safety alerts (van der Sijs et al., 2006).

The AI-ECG system's 4-10 monthly alerts arrive on top of a baseline of over 100 alerts per day. Although the absolute number is low, the burden of each alert is high because a "high risk of mortality" alert demands immediate, high-stakes cognitive engagement, unlike more routine notifications. The risk is that these critical alerts get lost among the hundreds of less critical alerts a physician must process weekly, feeding the cycle of alert fatigue. A small selection from the large body of studies on the subject illustrates that baseline:

Primary care physicians received an average of 77 notifications per day via their EHR inbox, with nearly a third of their time spent on "desktop medicine" (Tai-Seale et al., 2017).

Physicians received a median of 109 alerts requiring a response per day (Observer, 2013).

Physicians received a median of 135 notifications per day, with the top quartile receiving over 200 (Holmgren et al., 2022).

While the AI-ECG alert system demonstrates impressive predictive accuracy for identifying high-risk patients and reducing mortality, its long-term viability is threatened by the pervasive issue of alert fatigue. The "black box" nature of the AI, coupled with the already overwhelming number of daily alerts clinicians face, creates a scenario where even critical warnings may be overlooked. Future iterations of such systems must prioritize explainable AI to provide actionable insights and carefully consider the overall alert burden to ensure that life-saving technology doesn't inadvertently become another source of clinician burnout.

References

Churpek, M. M., Adhikari, R., & Edelson, D. P. (2017). The kosher caprese: A machine learning approach to the early detection of clinical deterioration. Critical Care Medicine, 45(6), 1083–1084. https://doi.org/10.1097/CCM.0000000000002447

DeVita, M. A., Smith, G. B., Adam, S. K., Adams-Pizarro, I., Buist, M., Bellomo, R., Bonello, R., Cerchiari, E., Farlow, B., Goldsmith, D., Haskell, H., Hillman, K., Howell, M., Hvarfner, A., & Kellett, J. (2010). "Identifying the hospitalised patient in crisis"—A consensus conference on the afferent limb of rapid response systems. Resuscitation, 81(4), 375–382. https://doi.org/10.1016/j.resuscitation.2009.12.008

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

Hannun, A. Y., Rajpurkar, P., Haghpanahi, M., Tison, G. H., Bourn, C., Turakhia, M. P., & Ng, A. Y. (2019). Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nature Medicine, 25(1), 65–69. https://doi.org/10.1038/s41591-018-0268-3

Wang, Z., Yan, W., & Oates, T. (2017). Time series classification from scratch with deep neural networks: A strong baseline. 2017 International Joint Conference on Neural Networks (IJCNN), 1578-1585. https://doi.org/10.1109/IJCNN.2017.7966037

van der Sijs, H., Aarts, J., Vulto, A., & Berg, M. (2006). Overriding of drug safety alerts in computerized physician order entry. Journal of the American Medical Informatics Association, 13(2), 138–147. https://doi.org/10.1136/jamia.2005.0008

Holmgren, A. J., Downing, N. L., Bates, D. W., Shanafelt, T. D., Milstein, A., & Sharp, C. D. (2022). Assessment of electronic health record use between US and non-US health systems. JAMA Internal Medicine, 182(2), 251-255.

Observer, M. (2013, November). Physicians experience 'alert fatigue' from EHRs. Healthcare IT News.
